Consistent, not congruent: input methods

-

Lately I've been noticing more discussions about fundamental design changes to Ubuntu Touch, Unity8, or convergent apps. I believe that these discussions should be allowed to flourish. Having them is a sign of a healthy community whose members are able to bounce ideas around in order to come to a better end result. To help frame the discussion and keep it respectful and realistic, we should continue to add to our design documentation. As I find the words to write them, I'll be making a series of posts that we can later contribute to this documentation. I'm bringing them here first so we're able to discuss their content and end up with a better result.

Some of the recent conversations ignore a concept which took me a very long time to grasp, but is essential to the convergence experience: Consistent, not congruent.

This post was really triggered by an offhand comment in the UBports chatroom recently:

how did you even get gesture controls to work fluidly with a mouse?

This stems from the common misunderstanding that convergent interactions must be the same across all input methods and screen sizes. You might remember a rather recent project that blew up in a large company's face due to this misunderstanding. Windows 8 was widely regarded as a commercial failure that doomed Ballmer as CEO of Microsoft [1]. It tried to make the same interface suitable for all screen sizes and input methods.

(image source https://www.bleepingcomputer.com/tutorials/how-to-use-windows-8-start-screen/)

Instead, a convergent operating environment should cater to the strengths of every platform it runs on without creating an entirely different experience. Each platform can have a different combination of input methods and screen sizes. Let's talk about input methods today...

Input methods

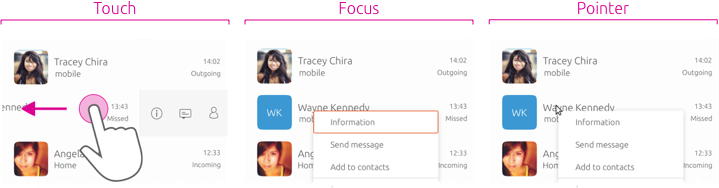

We all know the various methods generally used by people who are fully able to operate a computer: a mouse, a touchpad, a keyboard, or a touch screen. Each can be used on its own or in combination with the others. Each also has its own considerations:

- A mouse can be used to smoothly move a cursor on screen. Once the cursor has reached a control, two buttons are available to select the control or activate context actions. The mouse is a relative pointing device, meaning that it "allows the application of algorithms to transform the relationship between the location of the pointing device and the cursor."[2] In other words, the way you move the mouse is not mapped absolutely to the result on screen.

- Fingers on a touch screen can make smooth swiping motions from any point on screen. They can also tap or press and hold on controls. A touch screen is an absolute pointing device, a "highly learned direct mapping between the [action] and the location on the screen that involves kinesthetic or proprioceptive cues from the body [...]"[2]. Translated to English, the result of an action is more directly mapped to the action than with a relative pointing device.

- Fingers on a touchpad can also make smooth swiping motions, but the touchpad is a relative pointing device. Users expect to be able to make smooth scrolling or zoom motions using the touchpad. However, the primary action of moving the cursor is not necessarily a 1:1 mapping of action to result, and there is a dedicated way to "Select", such as a pressing action.

- A keyboard is not a pointing device at all! Instead, certain keys on the keyboard may be pressed to focus and trigger controls. In our design documentation, the keyboard is regarded as a "Focus" input method.
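To make the relative/absolute distinction concrete, here is a minimal Python sketch. It is not how Unity8 or any real input stack does it: the `Cursor` type, the function names, and the `gain` and `accel` tuning values are all made up for illustration. The only point is that an absolute device reports a position that is used as-is, while a relative device reports a movement that an algorithm is free to transform first.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    x: float
    y: float


def clamp(value: float, low: float, high: float) -> float:
    """Keep a coordinate inside the screen bounds."""
    return max(low, min(high, value))


def apply_absolute(touch_x: float, touch_y: float) -> Cursor:
    """Touch screen: the contact point *is* the on-screen position."""
    return Cursor(touch_x, touch_y)


def apply_relative(cursor: Cursor, dx: float, dy: float,
                   width: int, height: int,
                   gain: float = 1.0, accel: float = 0.05) -> Cursor:
    """Mouse or touchpad: a movement delta is transformed, then added.

    gain and accel are hypothetical tuning values. Faster movements are
    scaled up more, so hand motion and cursor motion are not 1:1.
    """
    speed = (dx * dx + dy * dy) ** 0.5
    scale = gain * (1.0 + accel * speed)
    return Cursor(
        clamp(cursor.x + dx * scale, 0, width - 1),
        clamp(cursor.y + dy * scale, 0, height - 1),
    )
```

The same physical movement of a mouse can therefore land the cursor in different places depending on speed and starting point, which is something a finger on a touch screen never experiences.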

That's a lot to take in, but it all adds up to a very short conclusion: interactions that feel natural for one input method feel alien to another. For example:

- Selection and activation

  - Pressing and holding on a stationary control for a second to select it feels natural on a touch screen. Clicking once feels natural with a mouse or touchpad.

  - Tapping once on a stationary control to activate it feels natural on a touch screen. Double-clicking feels natural with a mouse or touchpad.

- Repeatedly performing swipe actions (such as to open the launcher, drawer, or app switcher in Ubuntu Touch) is difficult with a mouse or touchpad. Instead, the same actions may be triggered with mouse "pressure" (pushing the cursor against the side of the screen, which is impossible to do with touch) or a stationary button which triggers the action (like the BFB in the upper or lower left corner of Unity8).

- A way to linearly cycle through the available controls on the screen must be provided for keyboard or accessibility tool users. This requirement does not map to any other input method.
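One way to picture the examples above is as a lookup from (input method, gesture) to a shared action, plus a focus ring for keyboard users. The Python below is only a sketch under my own assumptions: the gesture names, the `Action` enum, and the use of a "tab" key for focus cycling are hypothetical labels, not anything taken from Unity8 or Lomiri code.

```python
from enum import Enum
from typing import Optional, Sequence


class Action(Enum):
    SELECT = "select"
    ACTIVATE = "activate"
    OPEN_LAUNCHER = "open_launcher"
    FOCUS_NEXT = "focus_next"


# Each input method gets its own natural gesture for the same action.
# All gesture names here are illustrative assumptions.
BINDINGS = {
    ("touch", "press_and_hold"): Action.SELECT,
    ("touch", "tap"): Action.ACTIVATE,
    ("touch", "edge_swipe"): Action.OPEN_LAUNCHER,
    ("mouse", "click"): Action.SELECT,
    ("mouse", "double_click"): Action.ACTIVATE,
    ("mouse", "edge_pressure"): Action.OPEN_LAUNCHER,
    ("mouse", "launcher_button_click"): Action.OPEN_LAUNCHER,
    ("keyboard", "tab"): Action.FOCUS_NEXT,
}


def resolve(method: str, gesture: str) -> Optional[Action]:
    """Return the shared action for a gesture, or None if that gesture
    is not offered for the given input method."""
    return BINDINGS.get((method, gesture))


def next_focus(controls: Sequence[str], current: Optional[str],
               step: int = 1) -> Optional[str]:
    """Cycle linearly through focusable controls, wrapping at the ends."""
    if not controls:
        return None
    if current not in controls:
        return controls[0]
    return controls[(controls.index(current) + step) % len(controls)]
```

Note that `resolve("mouse", "edge_swipe")` returns `None`: the mouse is not asked to imitate a swipe, it simply has different triggers that lead to the same `OPEN_LAUNCHER` action.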

All of these different interactions feel like part of a whole, well-designed experience. For each input method, the user experience is consistent with the other input methods and with user expectations for the chosen method. So, we didn't get swipe gestures to work fluidly with a mouse. Instead, we gave the mouse its own ways, suited to its unique strengths, to trigger the same actions as a swipe gesture.

[1] https://www.ft.com/content/330c8b8e-b66b-11e2-93ba-00144feabdc0#axzz2Saqo8ZXA

[2] Comparison of Relative Versus Absolute Pointing Devices (PDF): https://www.cs.umd.edu/hcil/trs/2010-25/2010-25.pdf

-

Very nice.

Testing apps with `clickable` on the desktop makes it easy to see the focus input method using a keyboard (and how developers could improve our apps so they are easily used with a keyboard). It also reveals some flaws we have: there's no way to flick a Flickable with only a focus input method that I'm aware of.

I just realized that TV remote controls are also «focus input methods». A very tricky way of using devices (especially for entertainment purposes).

-

@CiberSheep said in Consistent, not congruent: input methods:

there's no way to flick a Flickable with only focus input method that I'm aware of.

It depends on the interaction. Once one has focused a "flickable" (a ListView for example), the arrow keys should select items in the list, or scroll the view. Unfortunately, it also seems there are some visual issues in Qt/QML in this respect, as highlighting of active focus can be problematic. We definitely need some work there to make things nice.
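To illustrate the behaviour I mean (this is not Qt code, just a small Python sketch with made-up names such as `ListViewState` and `first_visible`): the arrow keys move the current item, and the view only scrolls when the current item would otherwise leave the visible area.

```python
from dataclasses import dataclass


@dataclass
class ListViewState:
    item_count: int
    visible_rows: int
    current_index: int = 0
    first_visible: int = 0

    def handle_key(self, key: str) -> None:
        """Move the selection with Up/Down and scroll only when needed."""
        if self.item_count == 0:
            return
        if key == "Down":
            self.current_index = min(self.current_index + 1,
                                     self.item_count - 1)
        elif key == "Up":
            self.current_index = max(self.current_index - 1, 0)
        else:
            return  # other keys are ignored in this sketch

        # Scroll just enough to keep the current item on screen.
        if self.current_index < self.first_visible:
            self.first_visible = self.current_index
        elif self.current_index >= self.first_visible + self.visible_rows:
            self.first_visible = self.current_index - self.visible_rows + 1
```

With ten items and three visible rows, pressing Down three times selects the fourth item and scrolls the view by one row, so a keyboard-only user can reach everything without ever flicking.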